Welcome

In an age where innovation moves at lightning speed, it’s easy to be left behind. But fear not, tech enthusiast! Dive deep with us into the next five to ten years of technological evolution. From AI advancements, sustainable solutions, and cutting-edge robotics to the yet-to-be-imagined, our mission is to unravel, decode, and illuminate the disruptive innovations that will redefine our world.

Molecular Mayhem: Can We Handle the Power of Real-Space Computations?

Time-Dependent Hartree-Fock (TDHF) in real space represents a significant shift in how we understand and manipulate the molecular world. Traditional implementations, built on Gaussian-type orbital basis sets, have provided significant insights, but often at a prohibitive computational cost, especially for large molecules. Implementing TDHF in the Octopus real-space code not only offers a fresh perspective but also improves the scalability and flexibility of molecular simulations. Working on a real-space grid lets results be converged systematically by refining the grid, and the calculations parallelize efficiently, both essential for tackling complex molecular systems. The ability to model diffuse Rydberg states more accurately and efficiently is particularly transformative, opening new avenues for research into optical properties and beyond.

Advancements in Computational Methods

The introduction of Adaptively Compressed Exchange (ACE) in the Octopus code marks a significant improvement in computational efficiency. By compressing the exchange operator, ACE…

The Voice in the Room: How Voice-Activated Assistants Are Reshaping Our Daily Lives

The Invisible Presence of AI Assistants

In the not-so-distant past, the notion of conversing with a machine was the stuff of science fiction, a fantasy confined to the pages of an Asimov novel or the screen of a Spielberg flick. Fast forward to today, and we’re living in a world where talking to a device is not just real; it’s mundane. Voice-activated virtual assistants (the Siris, Alexas, and Google Assistants of the world) are no longer novel curiosities; they’re as ubiquitous as the smartphone in your pocket or the microwave in your kitchen. Powered by artificial intelligence (AI), these digital assistants are transforming our daily routines in ways both overt and subtle. They are not just tools; they are new members of our households: tireless, uncomplaining secretaries and ever-ready helpers. And like all technology that integrates seamlessly into our lives, they are…

Depth Detectives: Unraveling the Excitement and Perils of Monocular Depth Estimation

Monocular depth estimation is a cutting-edge technology that lets machines infer the three-dimensional structure of a scene from a single camera image. Much as people can still judge depth with one eye closed by relying on cues such as perspective, occlusion, and relative size, these systems learn to estimate how far away each part of a scene is, helping computers understand space and object placement. Imagine autonomous vehicles gauging distances with just one camera, or smartphones creating 3D models of any scene. This technology has the potential to change how we interact with our devices, making our digital experiences more immersive and intuitive.

Revolutionizing Industries with Advanced Vision

Monocular depth estimation is not just a scientific achievement; it could be a game-changer for several industries. In healthcare, for example, it could transform diagnostic imaging by enabling non-invasive 3D exploration of the human body and improving the precision of surgeries. In the realm of entertainment, filmmakers and game developers…

The Hidden Power of Next-Token Rewards in Large Language Models

Let’s start with this: large language models (LLMs) are impressive, sure, but until now they’ve been a bit like chess grandmasters stuck with a static playbook, unable to adjust on the fly without a costly retraining regimen. Enter GenARM, which isn’t just an incremental improvement; it’s a radical shift. GenARM steers a model during generation with token-by-token reward feedback, so its behavior can be guided without retraining the base model. Imagine being able to coach a chess master mid-game rather than between matches. For machine learning, this means more than faster adaptation: it points toward AI that can adjust in real time with us, shifting the paradigm from fixed to fluid.

Next-Token Rewards: A Revolution in the Making

Now, if we break down what makes GenARM so intriguing, it comes down to something deceptively simple: the next-token reward model. Traditional reward models score a response only after an entire sentence or even a paragraph…

Beyond the Robot’s Reach: Revolutionizing Manipulation Learning with Human Data

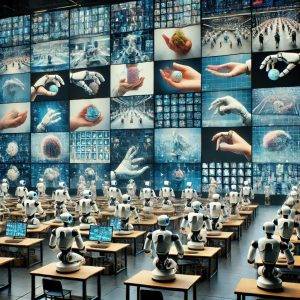

In a world where robots are still learning to understand and mimic human behavior, a project named EgoMimic stands out. EgoMimic is no ordinary tool; it’s an entire framework that captures, aligns, and scales human actions recorded from a first-person view, giving robots a new vantage point on human dexterity and nuance. This isn’t about simple gestures or basic tasks but about teaching robots the rich, often unnoticed intricacies of human hand movements. By recording human demonstrations through a pair of smart glasses (Meta’s Project Aria), EgoMimic scales up imitation learning in a new way. The framework doesn’t rely solely on large datasets of robot motions; it lets robots learn from human demonstrations directly, alongside robot data, merging the two worlds. The result? A system in which an additional hour of human demonstration can be significantly more valuable than an additional hour of robot-only data. EgoMimic aims not just for robotic mimicry…

Quantum Quirks: How the Secrets of Light Could Redefine Danger and Discovery

Imagine being able to manipulate the very fabric of light, correcting its imperfections to reveal details that would otherwise stay hidden. This isn’t a scene from a sci-fi movie; it’s real science happening right now through optical phase aberration correction using ultracold quantum gases. The technique harnesses the bizarre and fascinating behavior of atoms cooled to temperatures near absolute zero to measure distortions in light waves. These ultracold atoms act as ultra-sensitive sensors, revealing minute errors in optical systems that would otherwise be extremely hard to detect so that those errors can be corrected. Such precision opens up new possibilities for scientific exploration and technological advancement.

The Heart of the Quantum Sensor

At the heart of this cutting-edge technology is the experimental setup, a symphony of lasers, …

Recent Posts

- Cracking the Code of Motion: The AI That Constructs Skeletons from Chaos 02/23/2025

- AI’s New Gamble: Can Diffusion Models Overtake Autoregressive Giants? 02/23/2025

- When Mathematics Speaks in Code: The Search for an Explicit Formula 02/21/2025

- Beyond Reality: How AI Reconstructs Light, Shadow, and the Unseen 02/09/2025

- The Secret Language of Numbers: Counting Number Fields with Unseen Forces 02/08/2025

Legal Disclaimer

Please note that some of the links provided on our website are affiliate links. This means that we may earn a commission if you click on the link and make a purchase using the link. This is at no extra cost to you, but it does help us continue to provide valuable content and recommendations. Your support in purchasing through these links enables us to maintain our site and continue to offer our audience valuable insights and information. Thank you for your support!

The Future of Everything

Archives

- February 2025 (9)

- January 2025 (19)

- December 2024 (18)

- November 2024 (17)

- October 2024 (18)

- September 2024 (17)

- August 2024 (18)

- July 2024 (17)

- June 2024 (29)

- May 2024 (66)

- April 2024 (56)

- March 2024 (5)

- January 2024 (1)

Favorite Sites

- Building connected data ecosystems for AI at scale

- The Download: our bodies’ memories, and Traton’s electric trucks

This is today’s edition of The Download, our weekday newsletter that provides a daily dose of what’s going on in the world of technology. How do our bodies remember? “Like riding a bike” is shorthand for the remarkable way that our bodies remember how to move. Most of the time when we talk about muscle memory, we’re…

- How do our bodies remember?

MIT Technology Review Explains: Let our writers untangle the complex, messy world of technology to help you understand what’s coming next. You can read more from the series here. “Like riding a bike” is shorthand for the remarkable way that our bodies remember how to move. Most of the time when we talk about muscle memory, we’re…

- This test could reveal the health of your immune system

Attentive readers might have noticed my absence over the last couple of weeks. I’ve been trying to recover from a bout of illness. It got me thinking about the immune system, and how little I know about my own immune health. The vast array of cells, proteins, and biomolecules that works to defend us from…

- The Download: mysteries of the immunome, and how to choose a climate tech pioneer

This is today’s edition of The Download, our weekday newsletter that provides a daily dose of what’s going on in the world of technology. How healthy am I? My immunome knows the score. Made up of 1.8 trillion cells and trillions more proteins, metabolites, mRNA, and other biomolecules, every person’s immunome is different, and it is constantly…